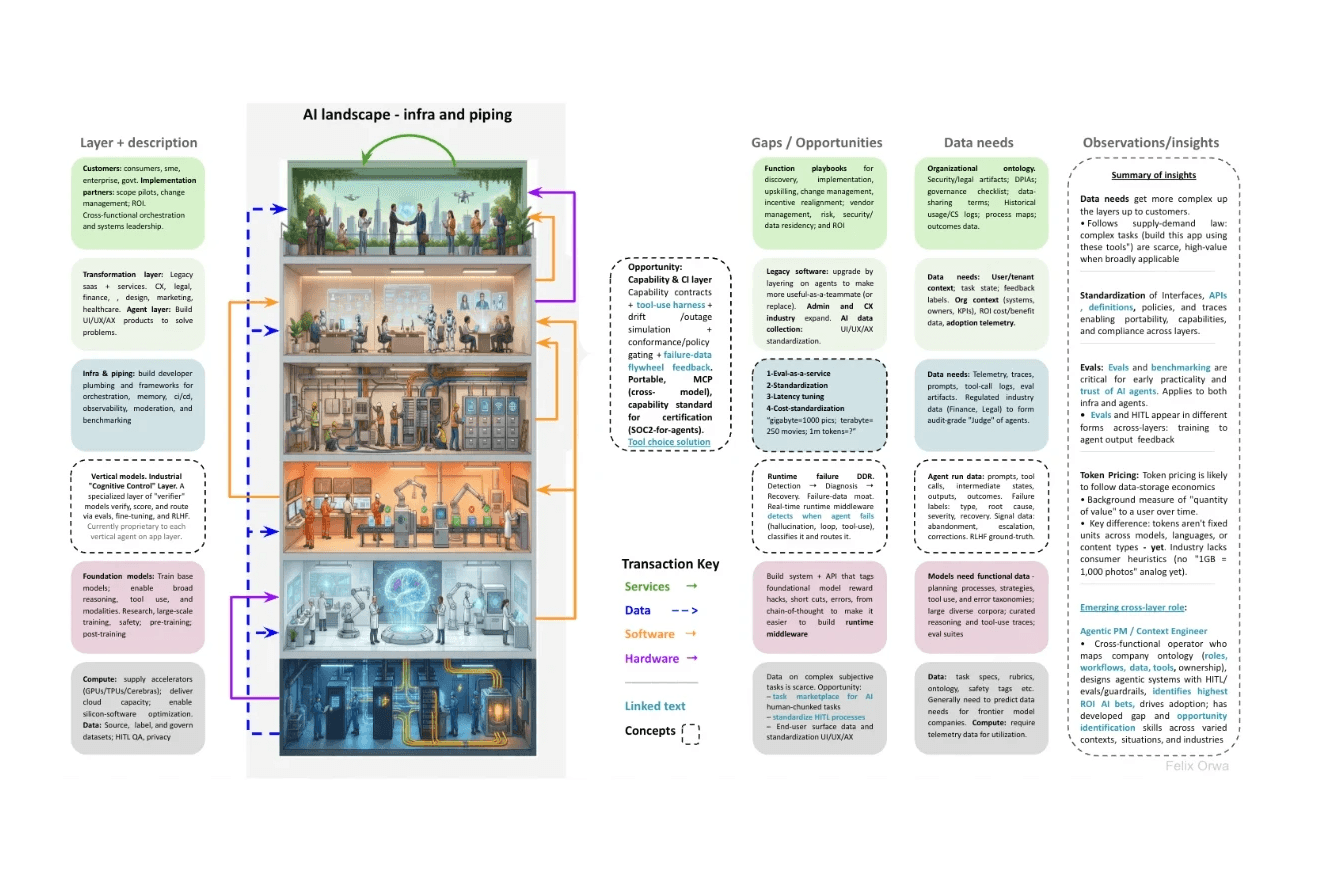

The AI layer cake - a visual map

Date

2025

Service

Industry decomposition

Client

Self

Project Overview

Background

AI is loud right now. New models. New tools. New platforms.

I've been curious about a simpler question: how does the system actually work?

So I mapped the AI industry into a layer cake. The AI layer cake is a front-to-back map of the AI stack, from chips and models to agents, workflows, and end users. It shows where data, evals, and incentives create leverage.

Every level of the cake relies on the foundation below it to support the people and businesses at the top. Bottom layers sell technology up to the software makers, while the customers at the top send money and usage data back down to improve the system.
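To make the two directions of flow concrete, here is a minimal sketch. The layer names are taken from the supply-chain reading of the map; the function names `technology_flow` and `feedback_flow` are hypothetical and only for illustration, and a real map would have more layers and more kinds of flow.

```python
# Layers listed bottom to top, following the supply-chain reading of the cake.
LAYERS = [
    "compute + energy + data",
    "models",
    "tooling/plumbing",
    "apps",
    "users/businesses/govt",
]


def technology_flow(layers):
    """Pairs of (seller, buyer): each layer sells technology to the one above."""
    return list(zip(layers, layers[1:]))


def feedback_flow(layers):
    """Pairs of (sender, receiver): money and usage data move back down."""
    top_down = layers[::-1]
    return list(zip(top_down, top_down[1:]))


for seller, buyer in technology_flow(LAYERS):
    print(f"technology: {seller} -> {buyer}")

for sender, receiver in feedback_flow(LAYERS):
    print(f"money + usage data: {sender} -> {receiver}")
```

The point of the sketch is only that every layer sits in both chains at once: it is a seller going up and a receiver of money and data coming down.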

What this map helped me see:

Where the real choke points live. The main one is runtime accuracy: tools, orchestration, error detection, evals, integration, recovery, and guardrails.

Where value is captured vs. created.

Data needs get more complex up the layers. Data follows the laws of supply and demand: examples of complex tasks ("build this app using these tools") are scarce, and they become highly valuable when they are broadly applicable, i.e. in high demand.

What will commoditize. Tokens will feel like bytes over time, following the data-storage economics arc. Key difference: tokens aren't standardized units across models, languages, or content types - yet. There are no heuristics for quantity of value per ~month (no "1GB = 1,000 photos" yet). This makes outcome-based pricing popular.

How to read it fast:

Read it like a supply chain up the cake: Compute + energy + data → models → tooling/plumbing → apps → users/businesses/govt. Labels are on the left, characteristics and takeaways on the right.

Ignore logos first. Focus on the left to understand the job of the layer.

Use the right-side notes for gaps + opportunities.

If you want the punchline, start with the summary of insights.

Key Highlights

The 5 things that jumped out while mapping

Token Pricing

• Token pricing will likely follow the data-storage economics arc

• AI is a new method to store, process, and interact with our data in a "more useful" way.

• Key difference: tokens aren't standardized units across models, languages, or content types - yet. The industry lacks consumer heuristics for quantity of value per ~month (no "1GB = 1,000 photos, 1TB = 250 movies" analog yet).

• This makes outcome-based pricing popular.
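A small worked example of why non-standardized tokens push toward outcome-based pricing. All numbers and model names below are made up for illustration; the only assumption is that two models can spend different numbers of tokens on the same task.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    name: str                      # hypothetical model name
    usd_per_million_tokens: float  # hypothetical list price
    tokens_for_task: int           # hypothetical tokens spent on the same task


def cost_per_task(model: ModelPricing) -> float:
    """Price of the outcome (one finished task), not of a single token."""
    return model.usd_per_million_tokens * model.tokens_for_task / 1_000_000


models = [
    ModelPricing("model_a", usd_per_million_tokens=2.0, tokens_for_task=30_000),
    ModelPricing("model_b", usd_per_million_tokens=5.0, tokens_for_task=8_000),
]

cheapest_per_token = min(models, key=lambda m: m.usd_per_million_tokens)
cheapest_per_task = min(models, key=cost_per_task)

print("cheapest per token:", cheapest_per_token.name)
print("cheapest per task: ", cheapest_per_task.name)
```

With these made-up numbers, the model with the lower per-token price is the more expensive one per finished task. A buyer cannot compare on token price alone, which is one reason pricing the outcome is easier to reason about.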

Data

• Data needs get more complex up the layers, toward customers.

• Data follows the laws of supply and demand: examples of complex tasks ("build this app using these tools") are scarce, and they become highly valuable when they are broadly applicable, i.e. in high demand.

Standardization

• Standardization of interfaces, APIs, definitions, policies, traces, and tokens remains a large opportunity. It would enable portability, capabilities, and compliance across layers.

Evals

• Evals and benchmarking are critical for the early practicality of, and trust in, AI agents. This applies to infra and agents, not only to models, where the focus is today. It is a vertical opportunity.

• Evals and human-in-the-loop (HITL) review appear in different forms across layers, from training to feedback on agent output.

Emerging cross-layer role: Agentic PM / Context Engineer

• A cross-functional operator who maps the company ontology (roles, workflows, data, tools, ownership), designs agentic systems with HITL, evals, and guardrails, identifies the highest-ROI AI bets, and drives adoption. This person has developed gap- and opportunity-identification skills across varied contexts, situations, and industries.

Why I built it

I build mental maps to understand systems.

My past work was tech + logistics + finance. We extracted valuable financial data through the messy reality of verification, systems, people, and logistics risk. Then we turned that into real-time insights for banking decisions with real money attached.

AI is different in surface area, but familiar in mechanics:

• capture the truth → structure it → evaluate it → decide → deliver

Small disclaimer: This is a functional model that will evolve over time.

If you find this interesting, here's feedback I'm curious about:

Which layer would you redraw?

What did I misunderstand about the flow of money, data, or trust?

Which of the gaps I identified do you think might be false, and which one is inevitable?